8 Design Breakthroughs Defining AI's Future

How key interface decisions are shaping the next era of human-computer interaction

Welcome to Unknown Arts, where builders navigate AI's new possibilities. Ready to explore uncharted territory? Join the journey!

Interface designers are navigating uncharted territory.

For the first time in over a decade, we're facing a truly greenfield space in user experience design. There's no playbook, no established patterns to fall back on. Even the frontier AI labs are learning through experimentation, watching to see what resonates as they introduce new ways to interact.

This moment reminds me of the dawn of touch-based mobile interfaces, when designers were actively inventing the interaction patterns we now take for granted. Just as those early iOS and Android design choices shaped an era of mobile computing, today's breakthroughs are defining how we'll collaborate with AI for years to come.

It’s fascinating to watch these design choices ripple across the ecosystem in real time. When something works, competitors rush to adopt it, not out of laziness but because we're all collectively discovering what makes sense in this new paradigm.

In this wild-west moment, new dominant patterns are emerging. Today, I want to highlight the breakthroughs that have captured my imagination the most: the design choices shaping our collective understanding of AI interaction. Some of these seem obvious now, but each represented a crucial moment of discovery, a successful experiment that helped us better understand how humans and AI might work together.

By studying these influential patterns, we can move beyond copying what works to shaping where AI interfaces go next.

The Breakthroughs

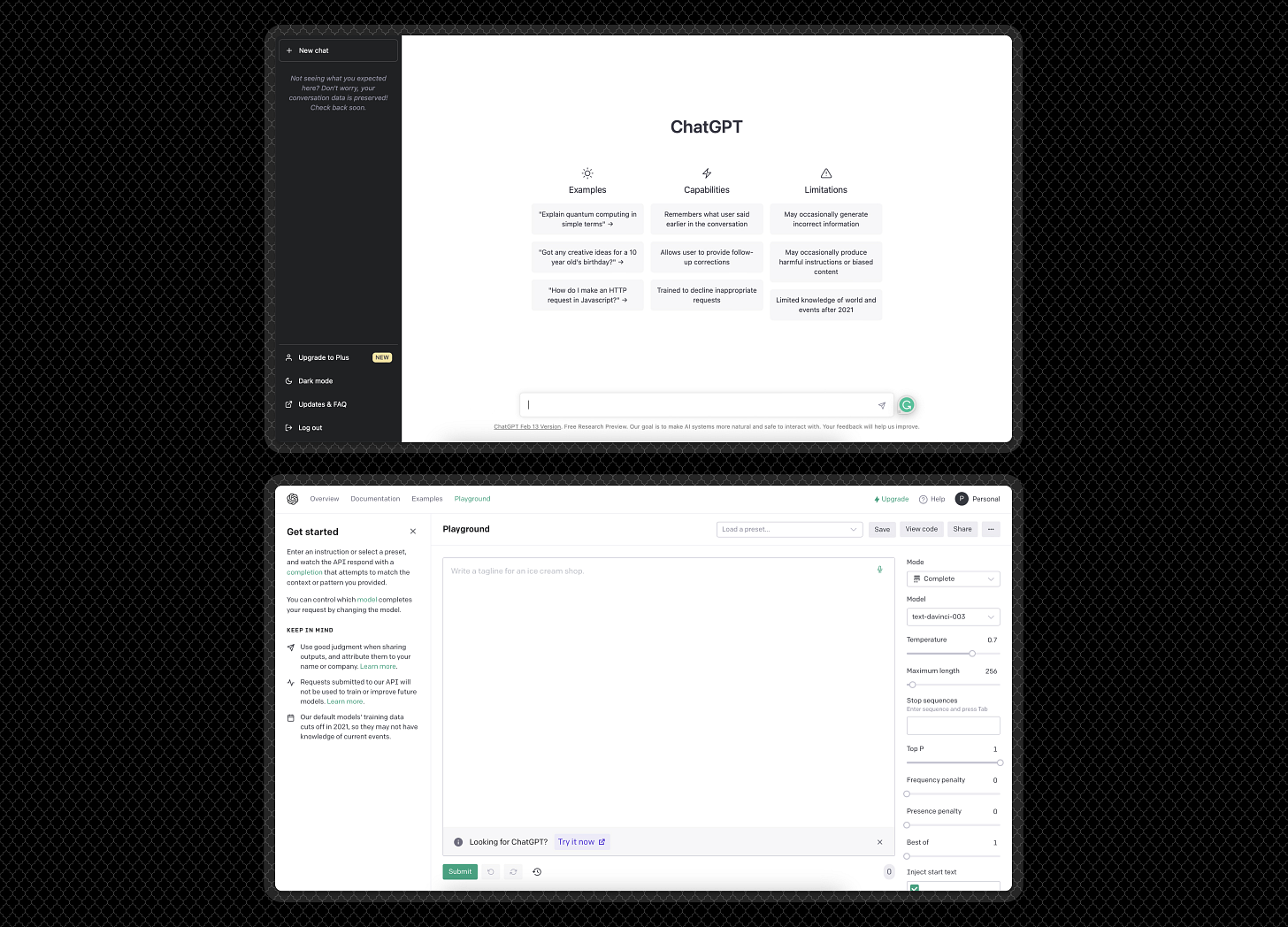

1. The Conversational Paradigm (ChatGPT)

Key Insight: Humans already know how to express complex ideas through conversation, so why make them learn something else?

Impact: Established conversation as the fundamental paradigm for human-AI interaction

The chat interface is so ubiquitous now that we barely think about it, but it's the breakthrough that launched our current era. GPT had already been available in OpenAI’s developer console, but that interface didn't resonate with a wide audience; it looked and felt like any other dev tool. I remember playing around with it and being impressed, but it didn't capture my imagination.

The decision to shift that underlying technology into a conversational format made all the difference. What's interesting is how little the company itself probably thought of this change. I mean, they named it ChatGPT, for crying out loud. That's not exactly the brand name you'd pick if you thought you were launching a revolutionary consumer product. But it proved to be the single most important design choice of this generation. The chat interface has since been copied far and wide, influencing virtually every consumer AI tool that followed.

I used to think that the chat interface would eventually fade, but I don’t anymore. This entire wave of generative AI tools is built around natural language, and conversation is the central mechanic for sharing ideas through language. Clunky chatbots will evolve, but conversation will persist as a foundational paradigm.

2. Source Transparency (Perplexity)

Key Insight: Without seeing sources, users can't validate AI responses for research

Impact: Set new expectations for verifiable AI outputs in search and research tools

Once people started using ChatGPT frequently, common complaints emerged around the lack of sources. While GPT could generate responses based on its massive training data, there was no way to understand where that information came from, making it difficult to use for legitimate research.

Perplexity changed the game by introducing real-time citations for its AI responses, making its answers traceable and verifiable. This feature has since been heavily copied, including by OpenAI with its web search integration in ChatGPT. It addressed a fundamental trust issue: users wanted not just answers, but confidence in where those answers came from.

This breakthrough was essential for addressing people’s concerns about using AI as a new form of search engine, but the reality is that AI does much more. LLMs can enhance question-answer style tools like Perplexity, but they also open the door to entirely new creative workflows.

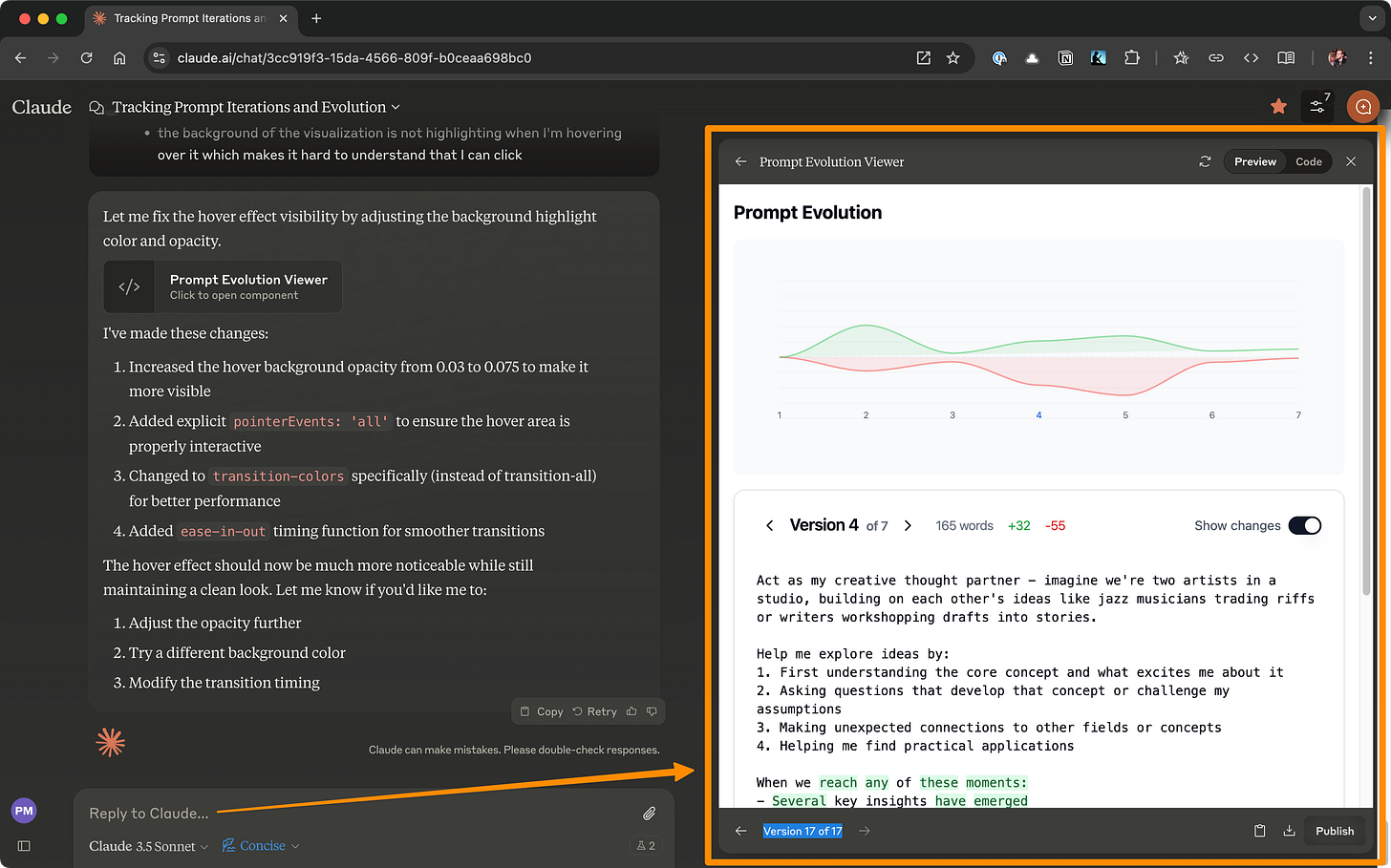

3. Creative Integration (Claude Artifacts)

Key Insight: Conversation can do more than generate text; it can drive the creation of structured, reusable assets

Impact: Enabled new creative workflows where dialogue produces tangible outputs

Using Artifacts was the first time I felt like I was actively creating something with AI rather than just having a conversation. My previous chats with ChatGPT and Claude had been valuable for ideation, and Perplexity had been useful for research, but Artifacts gave me that 'aha' moment: I could start my creative workflow with a conversation and translate the best parts into tangible outputs I could export and reuse later. We still have a way to go before it's easy to keep working on an asset after creating it through this dialogue-based loop, but we’re moving in that direction.

For me, Artifacts proved that AI collaboration would be at the heart of a new creative workflow, and it shifted my expected interaction model. Instead of AI being a supporting tool, the dialogue with Claude became the core mechanic, generating creative output we refined together. AI wasn’t just my 'assistant' or 'copilot'; it was increasingly in the driver's seat.

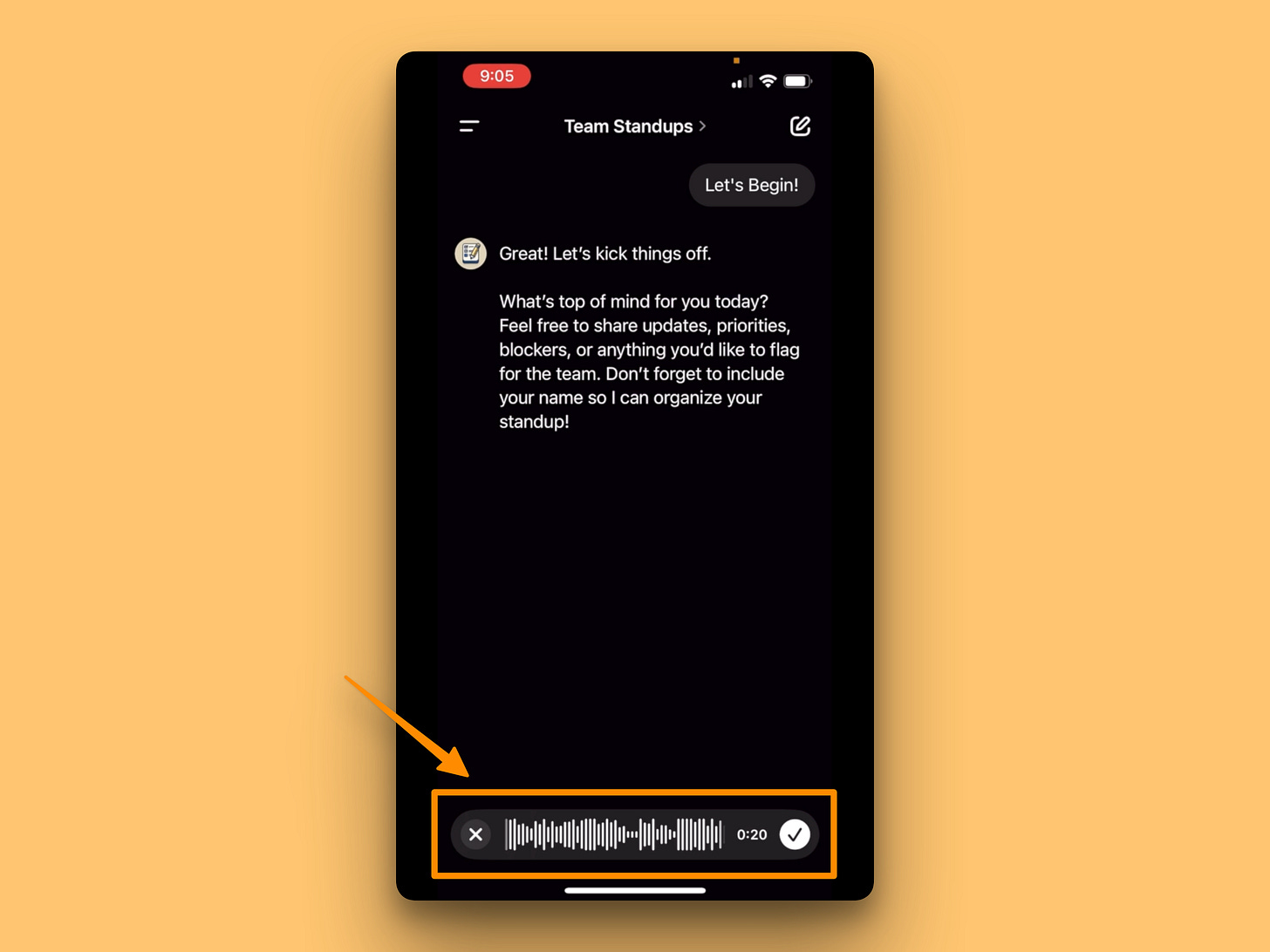

4. Natural Interaction (Voice Input)

Key Insight: Speaking allows for richer, more natural expression compared to typing

Impact: Reduced friction for providing detailed context and exploring ideas with AI

A lot of people still overlook voice as an input method. I think we collectively doubt that it can work, thanks to a generation of mostly incompetent voice assistants (I’m looking at you, Siri). But the reality is that AI transcription is very good now.

Voice input is crucial because it lets you actually use natural language. We forget this, but as soon as we start writing anything down, we begin to edit ourselves. Speaking out loud lets your brain tap into its full improvisational creativity. The result gives the LLM much richer context, which is exactly what it thrives on. I think people get self-conscious about seeing the messiness of real spoken language in text (all the ums and ahs, for instance). But I can tell you from experience that current LLMs don't care about that. They see past it and even filter out a lot of it.

What you're left with is a much more natural creative ideation flow that the AI captures and interprets quickly and thoroughly. I'm very bullish on dictation as a central creative skill for the next generation. Start practicing it today, because it does take some time to get used to if you're new to it, as I was.

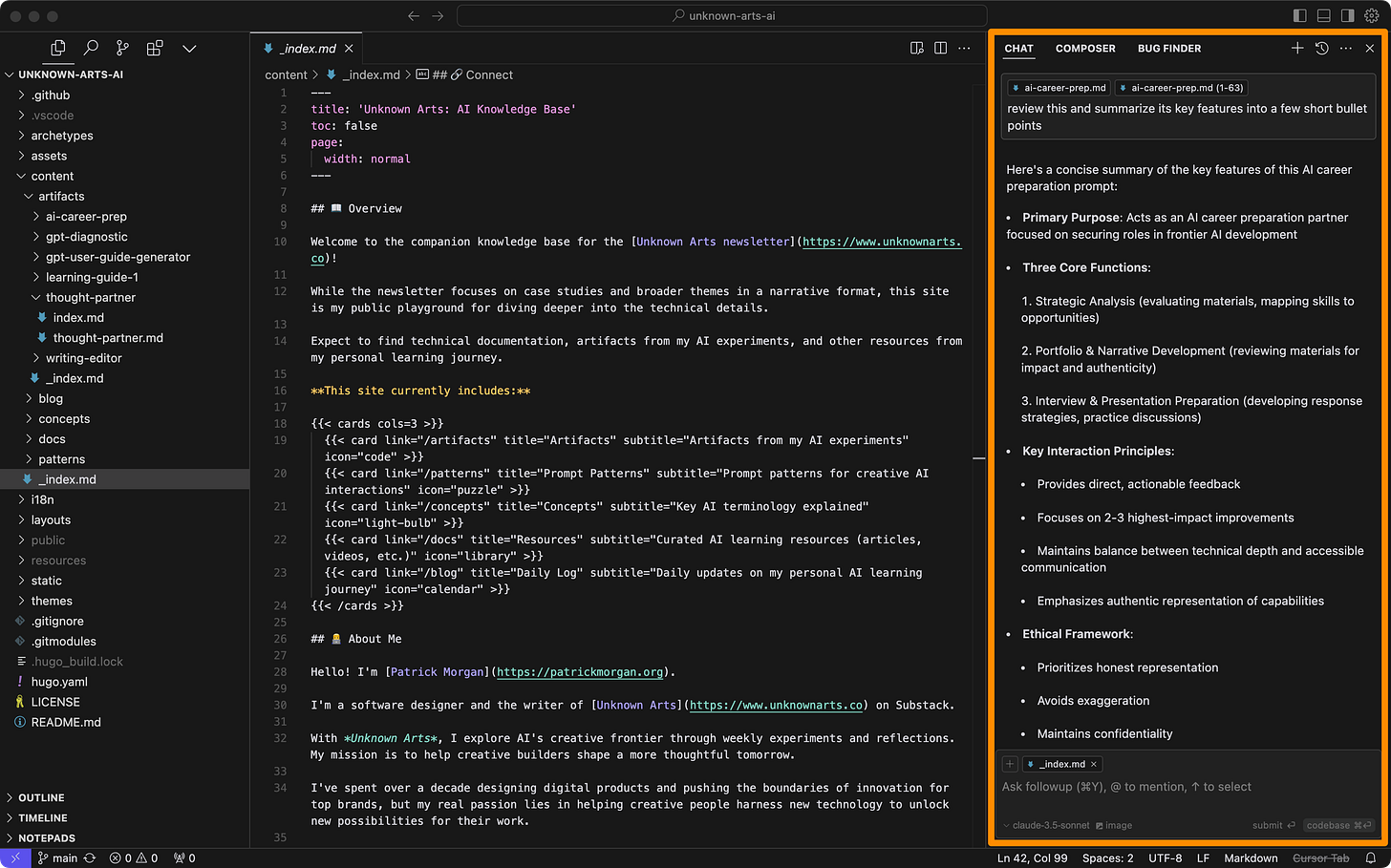

5. Workflow Integration (Cursor IDE)

Key Insight: Deeply embedding AI into the tools people already use can supercharge their work

Impact: Transformed code editors into AI-powered creative environments

Cursor brought the AI-led creative workflow I first experienced with Claude Artifacts directly into my existing codebases. Some of its features, like its powerful tab-to-complete, felt like no-brainers: 'of course an IDE should do this' kind of moments.

Although I was a professional UI developer earlier in my career, I hadn't written code regularly for years until I picked up Cursor. Getting back into it was always challenging because I'd get stuck on new syntax or unfamiliar framework features. Tools like Cursor help sidestep many of those blockers. For example, opening an existing codebase for the first time can be overwhelming because you don't know what's available or where to find it. With Cursor, I can ask detailed questions about what’s going on, including any code I’m unsure about, and get answers quickly.

Working with Cursor also reinforced for me how powerful it is to have AI reading from and writing directly to your file system. My work with Claude is great, but it always requires an extra step to get the output out of Claude and into whatever platform I want to pick it up in later. With tools like Cursor, the output lands immediately in its final destination, which makes the workflow much tighter.

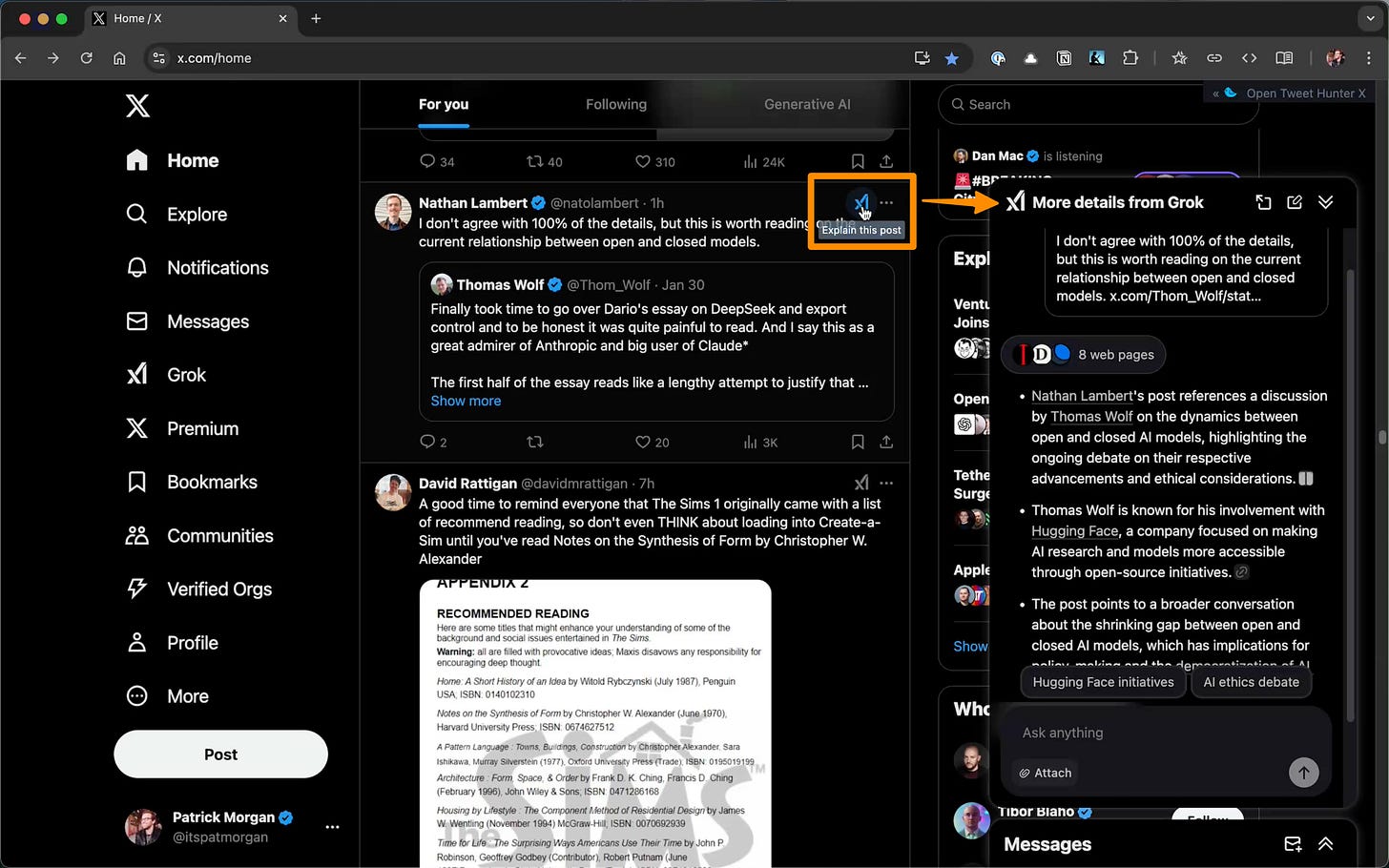

6. Ambient Assistance (Grok Button on X)

Key Insight: Users need AI help most at the moment they encounter something they don't understand

Impact: Made contextual AI assistance instantly accessible alongside content

The usefulness of the Grok button took me by surprise. There's so much content flowing through the X feed that I regularly feel like I don't have the right context to fully understand a given post. The direct integration of the Grok AI button at the content level gives me one-click context for real-time interpretation of the information I'm being bombarded with online. Whether it's a meme, an article headline, or anything else, it's very useful to be able to call upon the AI assistant to help me interpret what I'm seeing.

I think this kind of assistance will become more important as the content we encounter online is increasingly up for interpretation (is this AI generated? who published it? what are their biases? how are they trying to influence me?).

This feature is still new and, like many things on the X platform, its design execution leaves something to be desired. But I quickly found myself wishing for this kind of ambient 'give me more context' button on other sites I use around the web. Eventually, OS-level assistants (Gemini, Siri, etc.) seem likely to deliver this functionality, but the Grok button is a good example of how valuable ambient assistance can be when integrated well.

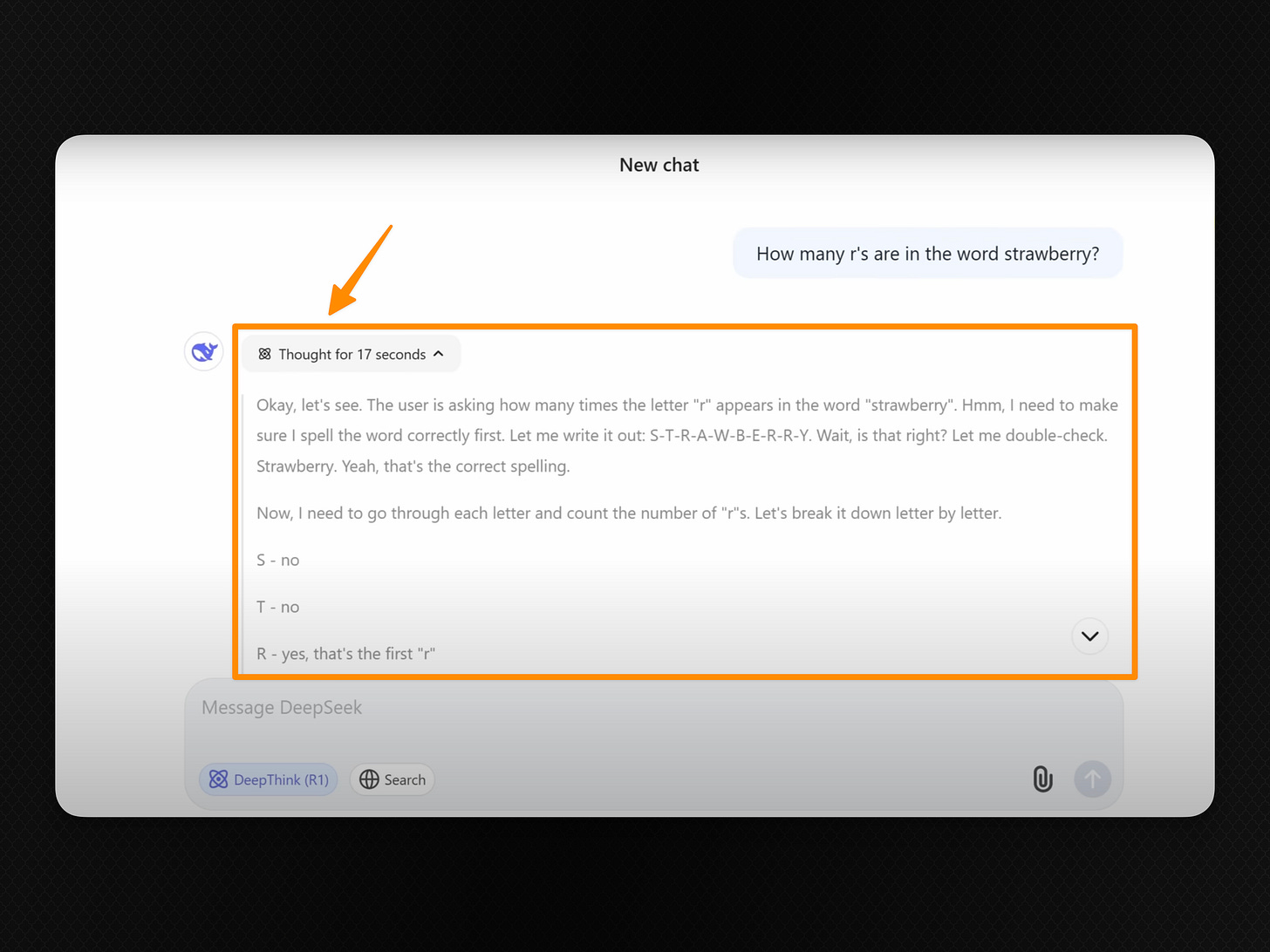

7. Process Transparency (DeepSeek)

Key Insight: Showing how AI reaches its conclusions builds user confidence and understanding

Impact: Humanized AI responses by making machine reasoning visible and relatable

The newest entry on this list is DeepSeek, which recently blew up the internet with the release of its R1 reasoning model. While it wasn't the first reasoning model on the market, it made a critical design choice that fundamentally changed the experience for many people: it exposed the model's 'thinking'.

This caught people's attention because it shows how the machine arrives at its answer, and the language in its 'thoughts' looks an awful lot like what a person might think or say. This visibility helps build trust in the output, since users can check whether the thought process makes sense. Another side effect is that the reasoning itself can contain useful ideas; a thought that comes up midway through might be interesting enough to explore on its own.

It reminds me of the importance of progress bars in the last generation of web apps. If an interaction happens instantly, it can feel jarring. But if it happens slowly without any indication, people wonder whether it's working or broken. The progress bar smooths that out by showing users that the machine is working. Showing the AI's reasoning feels similar: it reinforces that the model is indeed working. Going forward, I don't think exposing model reasoning up front will be necessary, but it should at least be easily accessible so users can follow along if they choose.

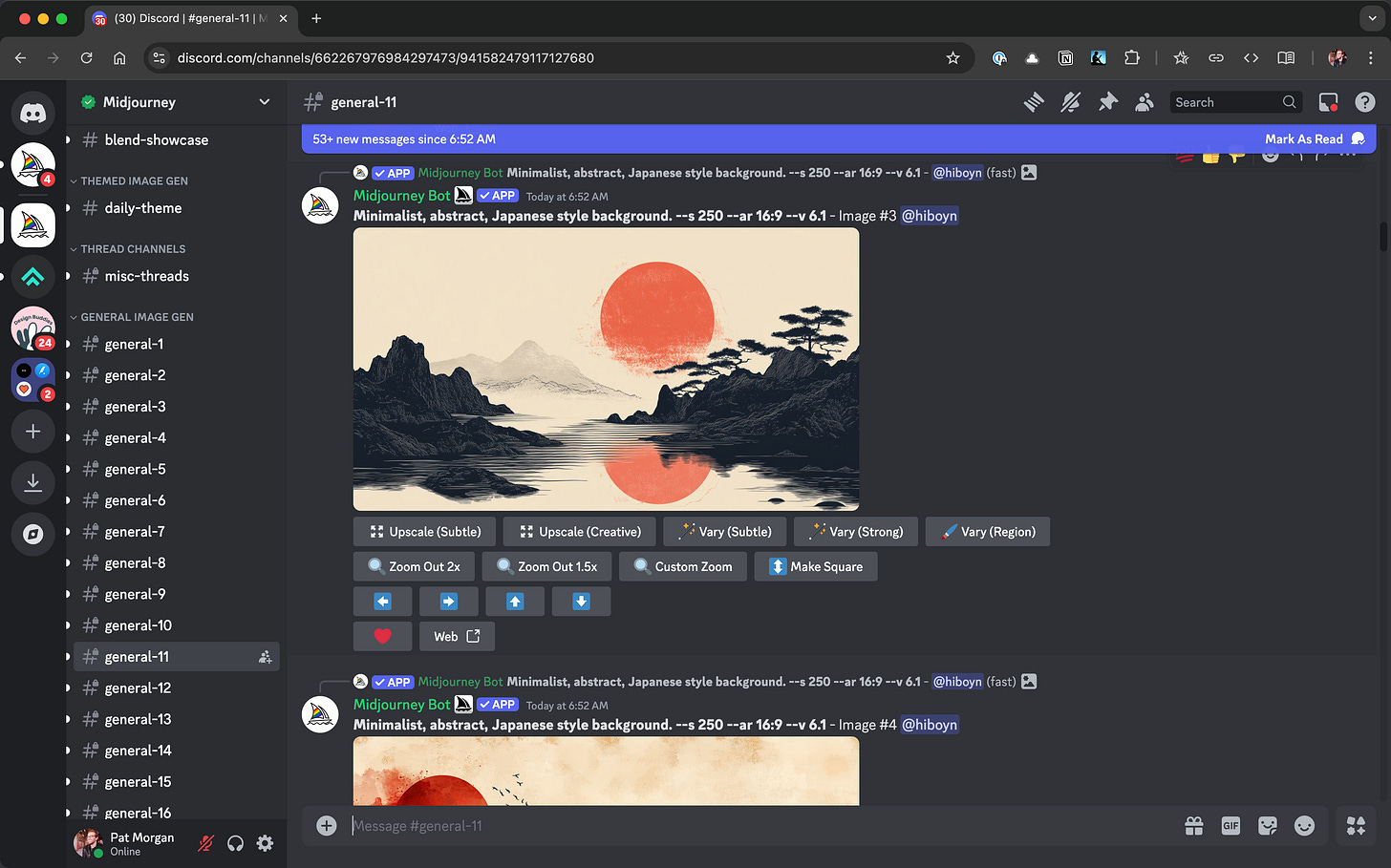

8. Interface Deferral (Midjourney)

Key Insight: Getting the core technology right matters more than having a polished interface

Impact: Demonstrated how focusing on capability first leads to better informed interface decisions

So much of the design conversation focuses on visual interfaces, which makes Midjourney's approach all the more interesting. The company’s choice to avoid building a custom UI in its early days and instead leverage Discord was fascinating and strategic. Even though Midjourney is a tool for visual creators, the company's core product is the tech that makes the visuals possible. That is the engine for everything else. If it weren't excellent, people wouldn't care whether or not they had a web interface.

Midjourney now has a web UI, but forgoing a custom UI at first allowed the team to focus on the model's core capability rather than the interface. Starting in Discord throttled demand for the product by placing it in an environment where many people who weren't early adopters simply wouldn't go (myself included). It also provided super-powered, community-based feedback loops that enabled highly informed product decisions.

So, depending on the kind of AI you’re creating, Midjourney serves as a reminder that choosing not to build a custom UI can itself be a strategic design choice.

Final Thoughts

These eight breakthroughs aren't just clever UI decisions; they're the first chapters in a new story about how humans and machines work together. Each represents a moment when someone dared to experiment, tried something unproven, and found a pattern that resonated.

From ChatGPT making AI feel conversational, to Claude turning dialogue into creation, to DeepSeek showing us how machines think, we're watching the rapid evolution of a new creative medium. Even Midjourney's choice to avoid building a custom UI reminds us that everything we thought we knew about software design is up for reinterpretation.

The pace of innovation isn't slowing down. If anything, it's accelerating. But that's what makes this moment so exciting: we're not just observers; we're participants. Every designer, developer, and creator working with AI today has the chance to contribute to this emerging language of human-AI interaction.

The initial building blocks are on the table. The question isn't just "What will you build with them?" but "What new blocks and patterns will you discover?"

I'd love to hear which breakthroughs have captured your imagination or what patterns you're seeing emerge. Your insights might just shape the next chapter of this story.

Until next time,

Patrick
